|

I am currently a Ph.D. student in the Department of Automation at Tsinghua University, advised by Prof. Song-Chun Zhu. I am also a research intern in the General Vision Lab at the Beijing Institute for General Artificial Intelligence (BIGAI), where I am grateful to be advised by Dr. Tengyu Liu and Dr. Siyuan Huang. Previously, I obtained my B.Eng. degree from Tsinghua University in 2023. My research interests lie at the intersection of robotic manipulation and 3D computer vision. My long-term goal is to develop embodied intelligent systems that can interpret human intent and interact naturally with people in diverse environments, while learning reusable, open-ended low-level skills and high-level common sense. Currently, I am working on 3D scene understanding and robotic manipulation learning, pushing the boundaries of how robots operate in complex settings. Email / CV / Google Scholar / GitHub / Twitter |

|

|

|

|

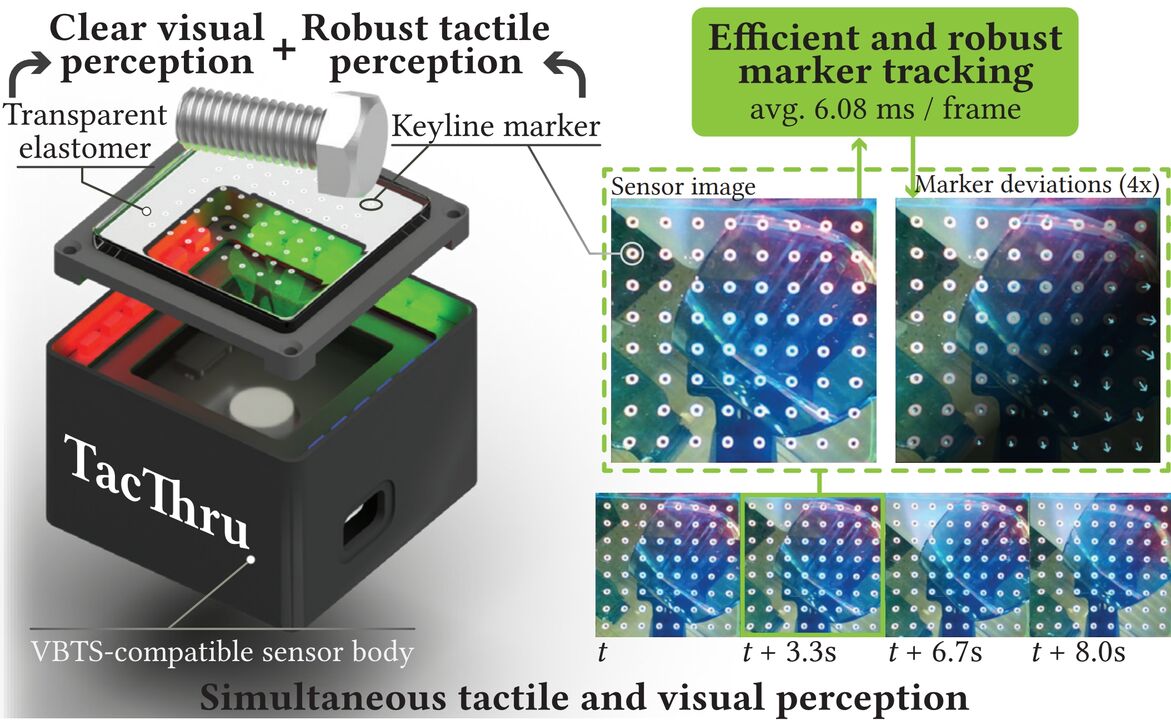

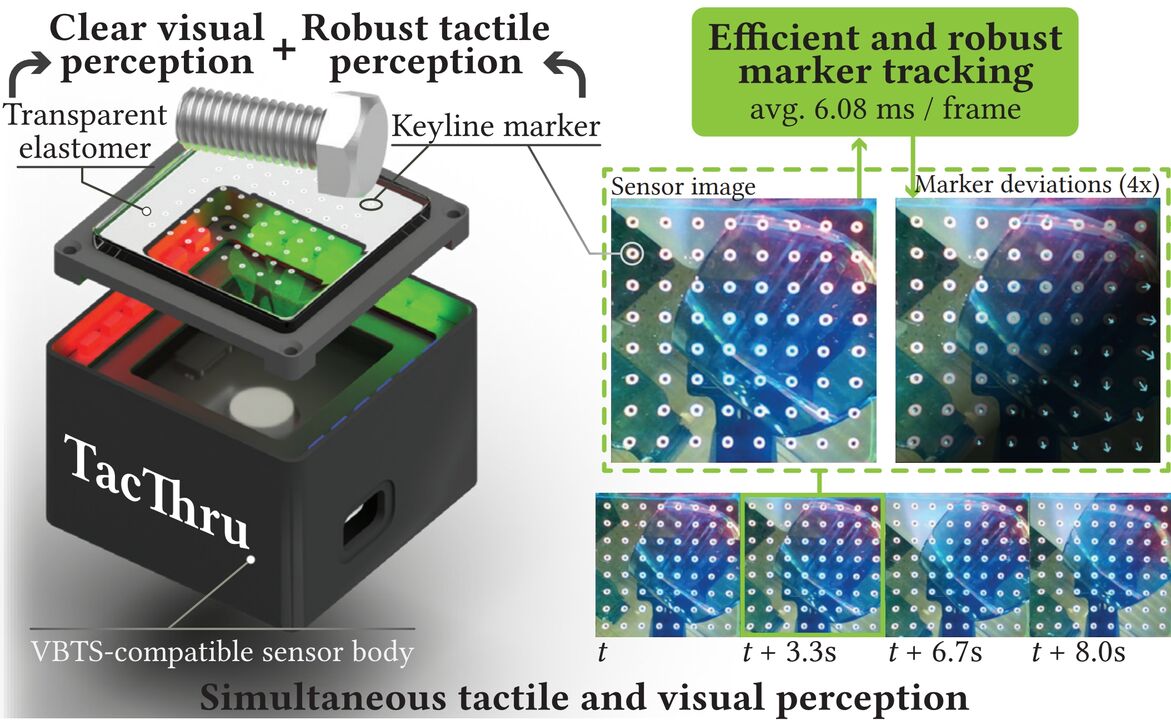

Yuyang Li*, Yinghan Chen*, Zihang Zhao, Puhao Li, Tengyu Liu, Siyuan Huang, Yixin Zhu arXiv 2025 [Paper] [Code] [Data] [Hardware] [Project Page] |

|

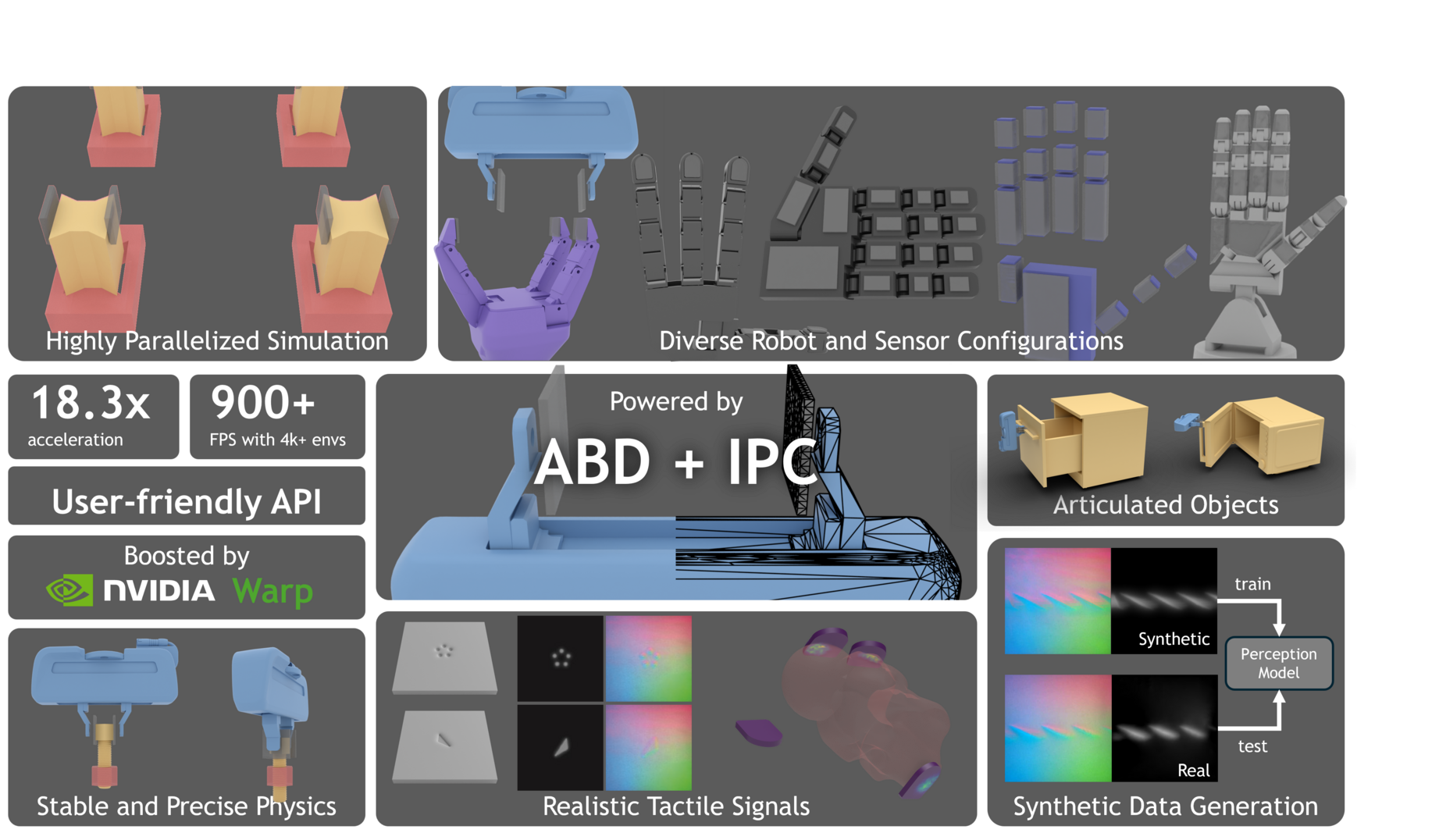

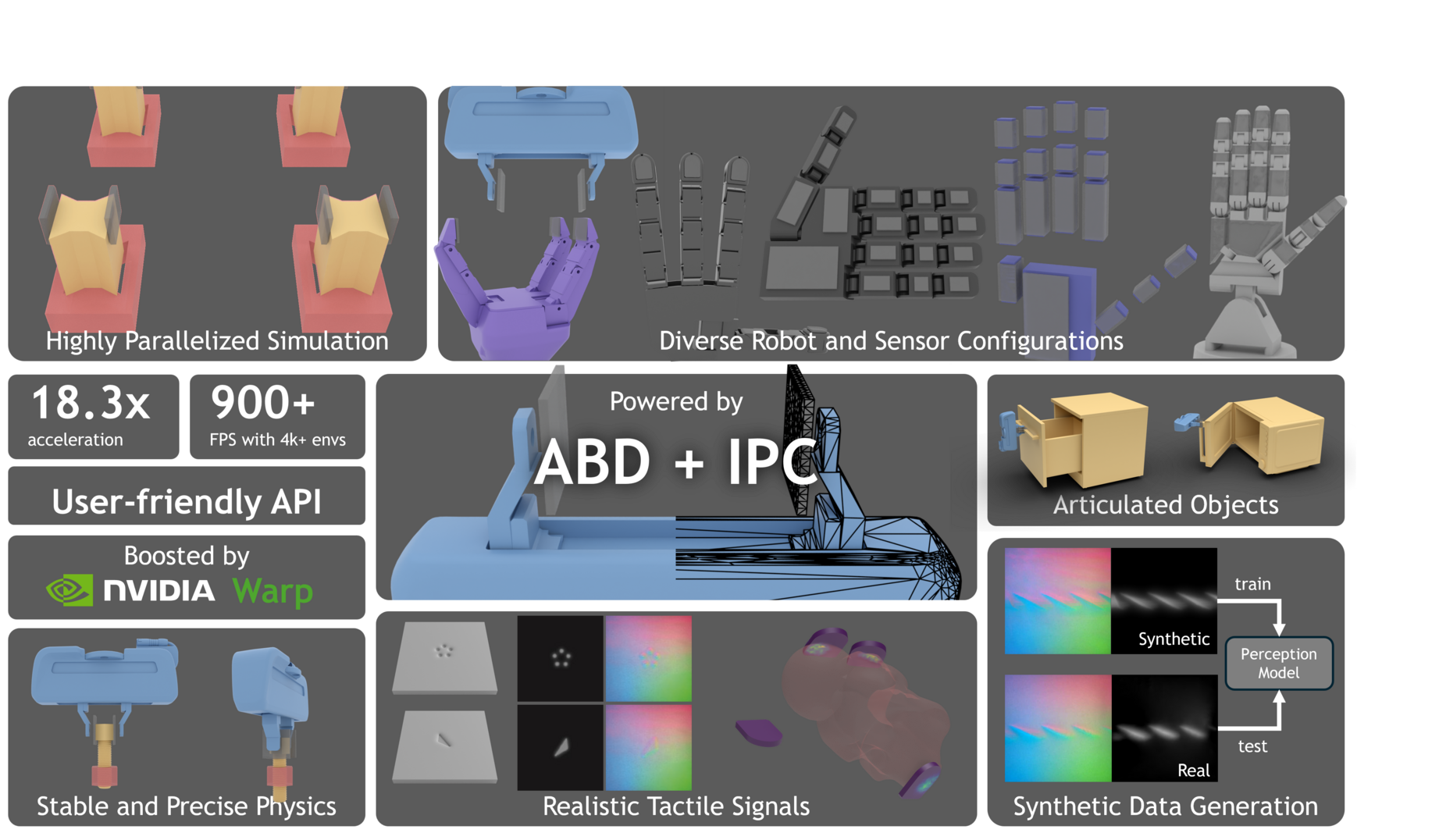

Yuyang Li*, Wenxin Du*, Chang Yu*, Puhao Li, Zihang Zhao, Tengyu Liu, Chenfanfu Jiang, Yixin Zhu, Siyuan Huang NeurIPS 2025 (Spotlight) [Paper] [Code] [Docs] [NVIDIA Tech Blog] |

|

Puhao Li, Yingying Wu, Ziheng Xi, Wanlin Li, Yuzhe Huang, Zhiyuan Zhang, Yinghan Chen, Jianan Wang, Song-Chun Zhu, Tengyu Liu, Siyuan Huang CoRL 2025 [Paper] [Code] [Project Page] |

|

Guanxing Lu*, Baoxiong Jia*, Puhao Li*, Yixin Chen, Ziwei Wang, Yansong Tang, Siyuan Huang ICCV 2025 [Paper] [Code] [Project Page] |

|

Ziyin Xiong*, Yinghan Chen*, Puhao Li, Yixin Zhu, Tengyu Liu, Siyuan Huang IROS 2025 [Paper] [Code] [Project Page] |

|

Kailin Li, Puhao Li, Tengyu Liu, Yuyang Li, Siyuan Huang CVPR 2025 [Paper] [Code] [Data] [Project Page] |

|

Huangyue Yu*, Baoxiong Jia*, Yixin Chen*, Yandan Yang, Puhao Li, Rongpeng Su, Jiaxin Li, Qing Li, Wei Liang, Song-Chun Zhu, Tengyu Liu, Siyuan Huang CVPR 2025 [Paper] [Code] [Data] [Project Page] |

|

ICRA 2025 [Paper] [Project Page] |

|

Junfeng Ni*, Yixin Chen*, Bohan Jing, Nan Jiang, Bing Wang, Bo Dai, Puhao Li, Yixin Zhu, Song-Chun Zhu, Siyuan Huang NeurIPS 2024 [Paper] [Code] [Project Page] |

|

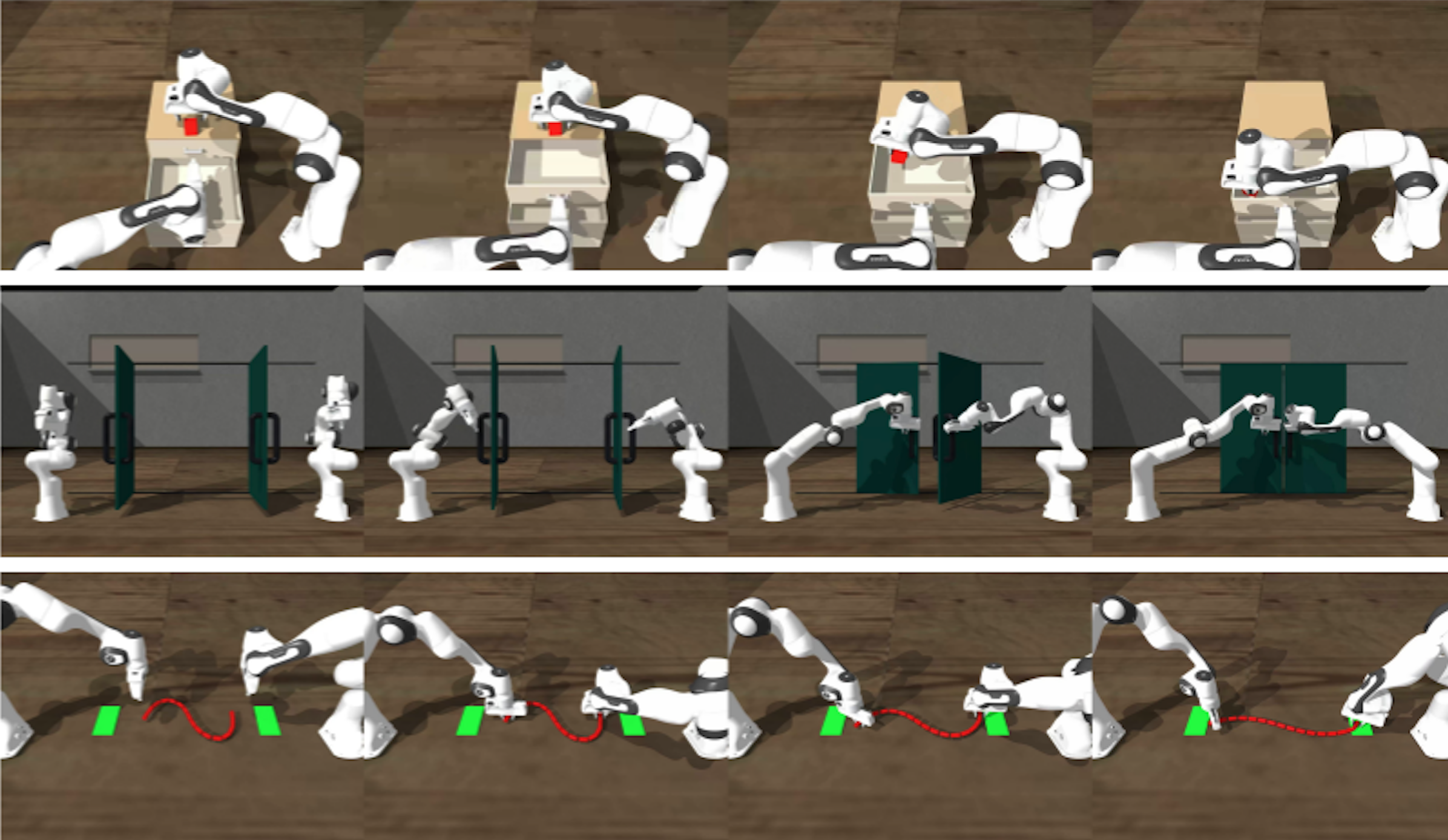

Puhao Li*, Tengyu Liu*, Yuyang Li, Muzhi Han, Haoran Geng, Shu Wang, Yixin Zhu, Song-Chun Zhu, Siyuan Huang IROS 2024 (Oral Pitch) [Paper] [Code] [Project Page] |

|

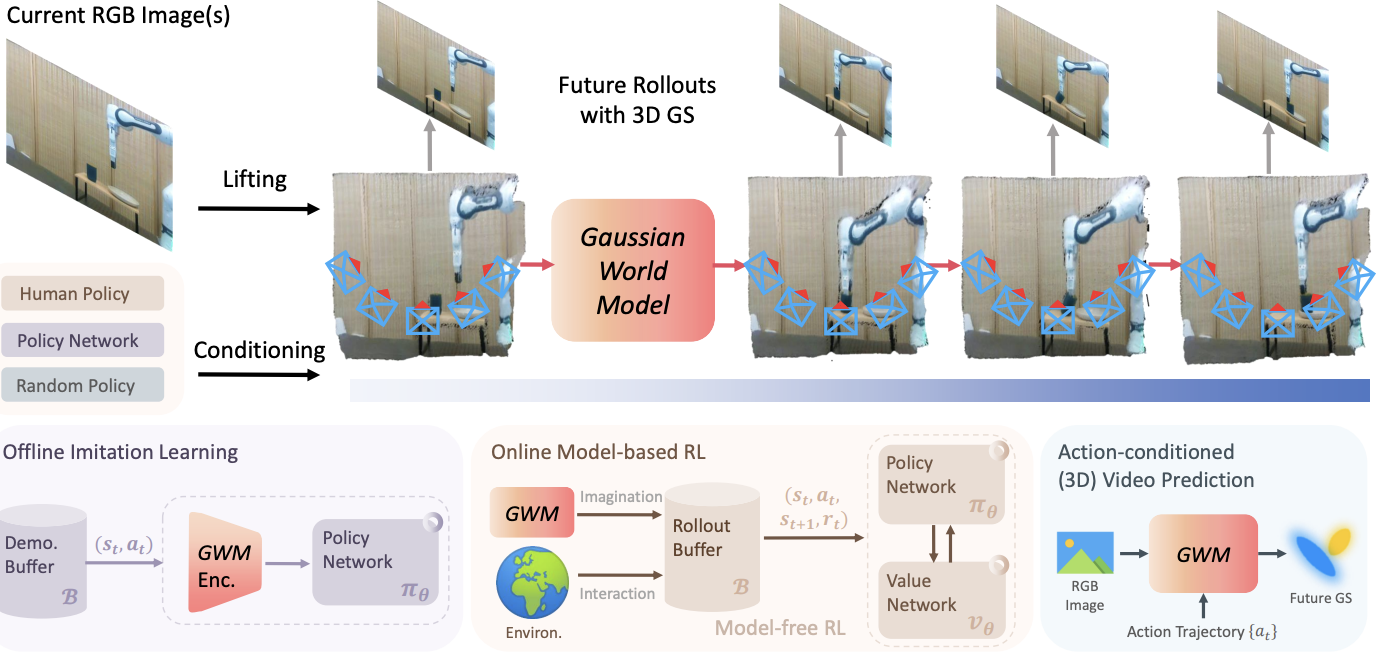

Yuyang Li, Bo Liu, Yiran Geng, Puhao Li, Yaodong Yang, Yixin Zhu, Tengyu Liu, Siyuan Huang RA-L, presented at IROS 2024 (Oral Presentation) [Paper] [Code] [Data] [Project Page] |

|

Zan Wang, Yixin Chen, Baoxiong Jia, Puhao Li, Jinlu Zhang, Jingze Zhang, Tengyu Liu, Yixin Zhu, Wei Liang, Siyuan Huang CVPR 2024 (Highlight) [Paper] [Code] [Project Page] |

|

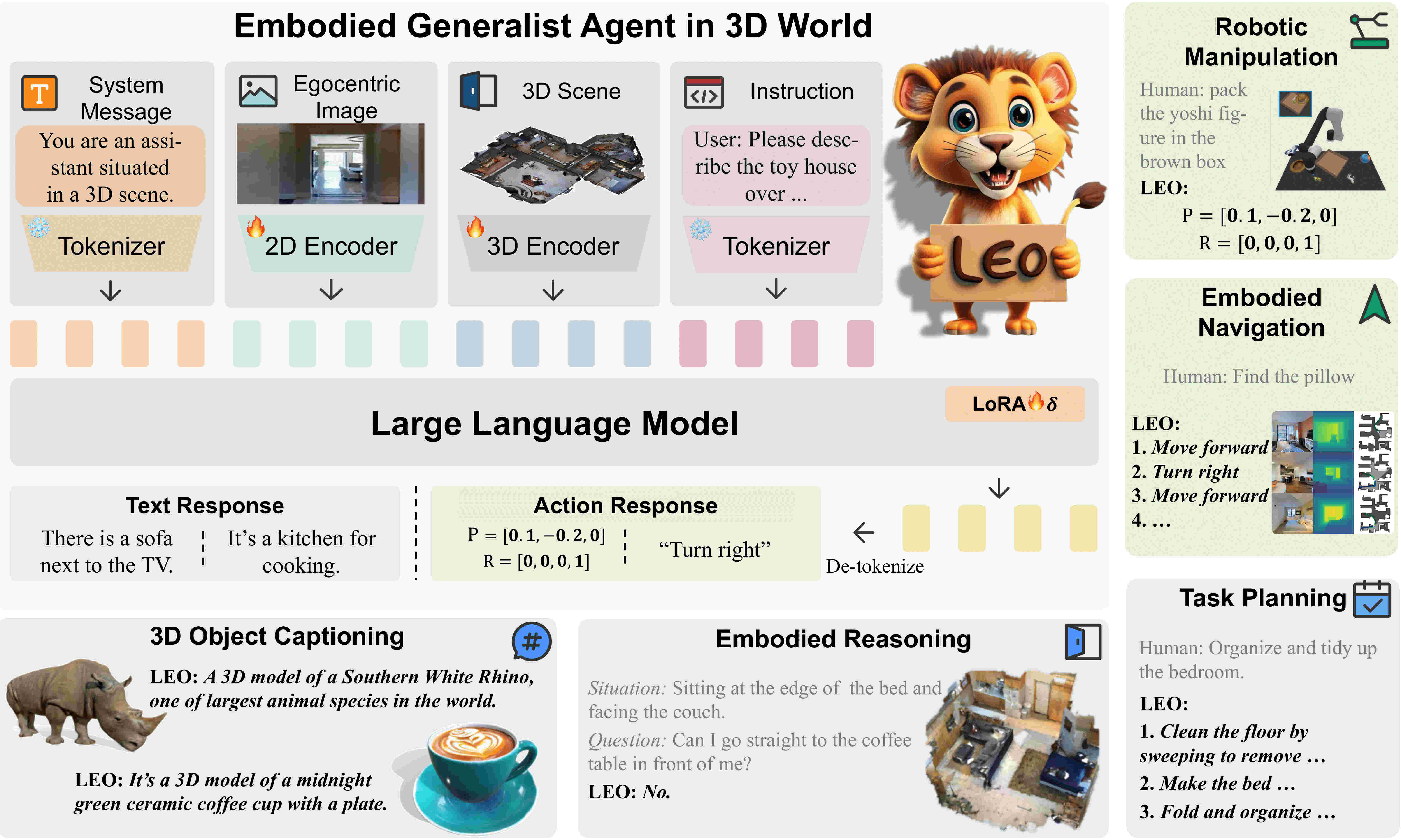

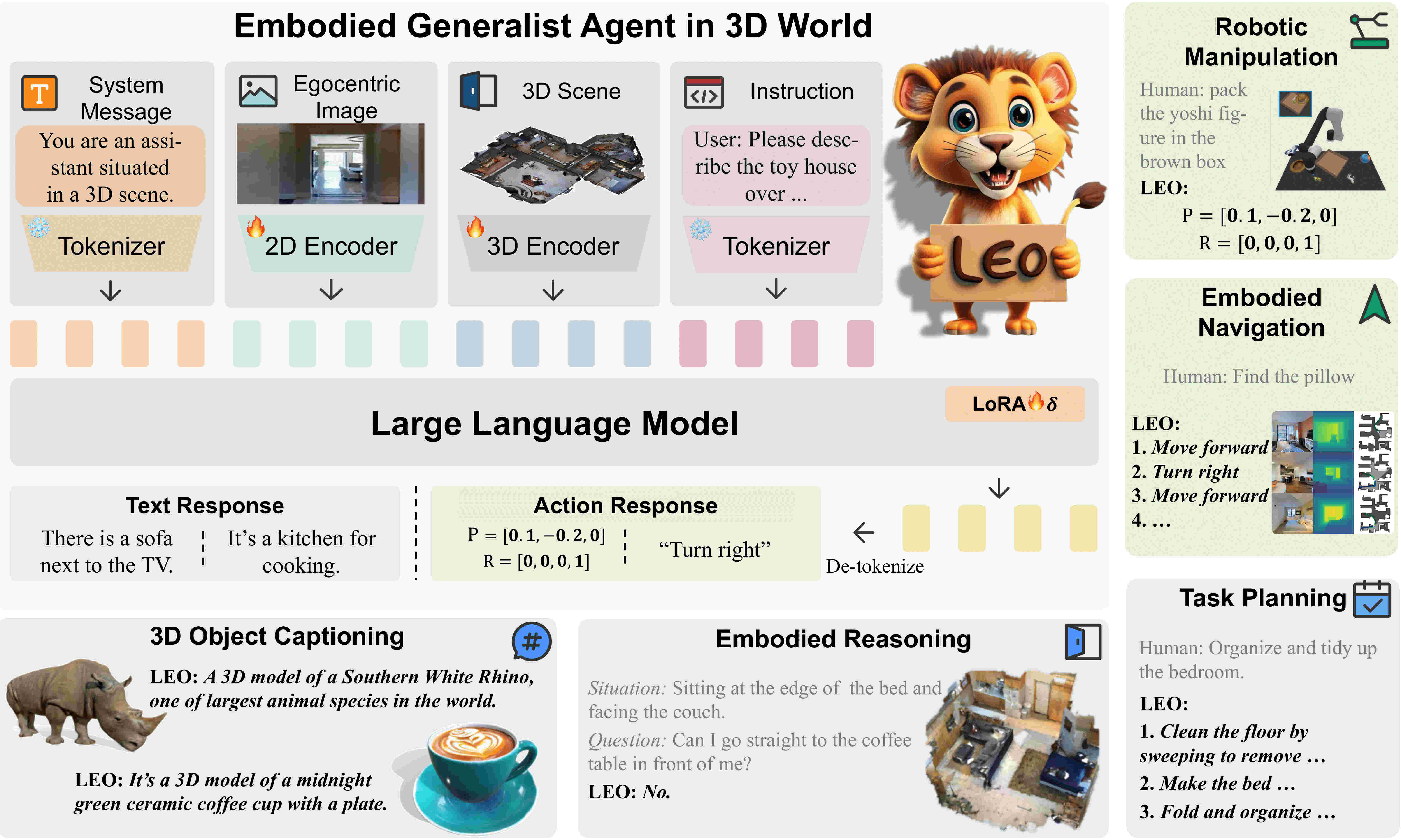

Jiangyong Huang*, Silong Yong*, Xiaojian Ma*, Xiongkun Linghu*, Puhao Li, Yan Wang, Qing Li, Song-Chun Zhu, Baoxiong Jia, Siyuan Huang ICML 2024, ICLR 2024 @ LLMAgents Workshop [Paper] [Code] [Data] [Project Page] |

|

Siyuan Huang*, Zan Wang*, Puhao Li, Baoxiong Jia, Tengyu Liu, Yixin Zhu, Wei Liang, Song-Chun Zhu CVPR 2023 [Paper] [Code] [Project Page] [Hugging Face] |

|

Puhao Li*, Tengyu Liu*, Yuyang Li, Yiran Geng, Yixin Zhu, Yaodong Yang, Siyuan Huang ICRA 2023 [Paper] [Code] [Data] [Project Page] |

|

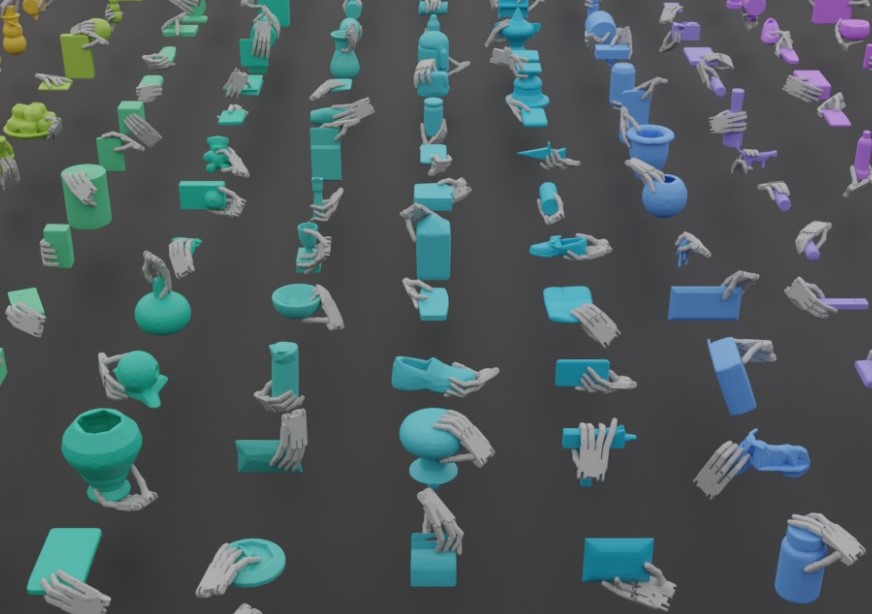

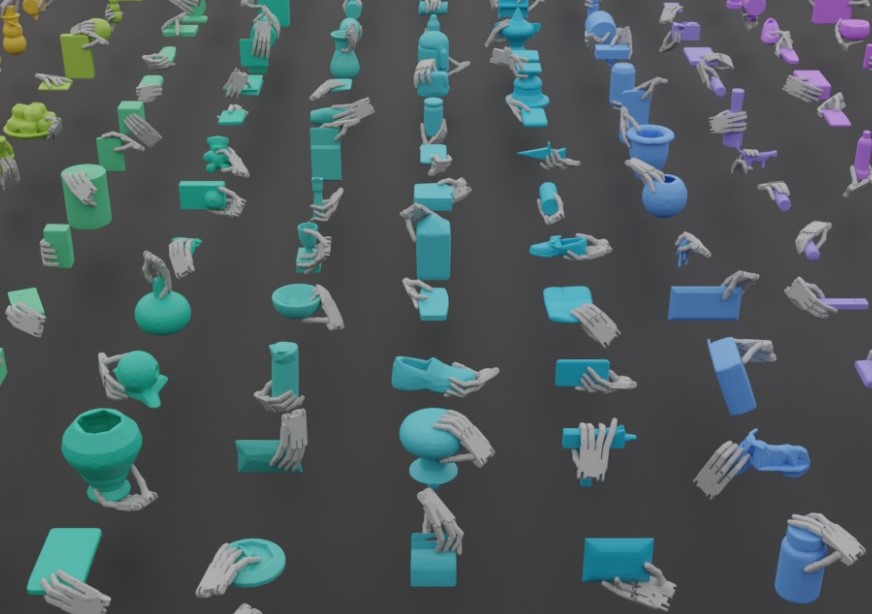

Ruicheng Wang*, Jialiang Zhang*, Jiayi Chen, Yinzhen Xu, Puhao Li, Tengyu Liu, He Wang ICRA 2023 (Oral Presentation, Outstanding Manipulation Paper Finalist) [Paper] [Code] [Data] [Project Page] |

|

|

|

Tsinghua University, China

2023.09 - present Ph.D. Student Advisor: Prof. Song-Chun Zhu |

|

|

Beijing Institute for General Artificial Intelligence (BIGAI), China

2021.09 - present Research Intern Advisors: Dr. Tengyu Liu and Dr. Siyuan Huang |

|

Tsinghua University, China

2019.08 - 2023.06 Undergraduate Student |

|

Feel free to contact me if you have any questions. Thanks for stopping by 😊

|